SearchInform CISO Alex Drozd

There are a lot of rumors about deepfakes. I personally try not to make a fool of myself when commenting on related issues. Take the following question: “Is it possible to fake a voice during a telephone call, in other words, on the fly?” The answer is that it is not yet possible. Another popular question: “Is the number of fraud cases involving deepfakes increasing?” The answer is that there are not enough statistics yet, and if the calculation method is imprecise, the numbers may seriously distort reality. “Is it possible to make a deepfake using only publicly available data?” This question is harder to answer. In fact, it depends: there is no unequivocal answer unless some additional conditions are taken into account, so further checks are required. So I set myself the following initial question: is it possible to make a high-quality deepfake (video and audio) without deep knowledge of the subject?

As a bonus, based on the results of this research, I examined a compilation called Top 10 Deepfake Videos. I recommend using these videos to practise spotting the signs of a fake.

Disclaimer! This article will obviously become outdated quickly, so feel free to share links to new tools and services.

Relevance

Some people are very enthusiastic about technological progress, but I personally take a different view: new technologies always bring risks that information security specialists have to deal with. Luckily, there are no signs yet of deepfakes being actively used in the corporate segment. However, the risk of isolated attacks is quite high. Below are a few cases of deepfakes being used for deception and fraud.

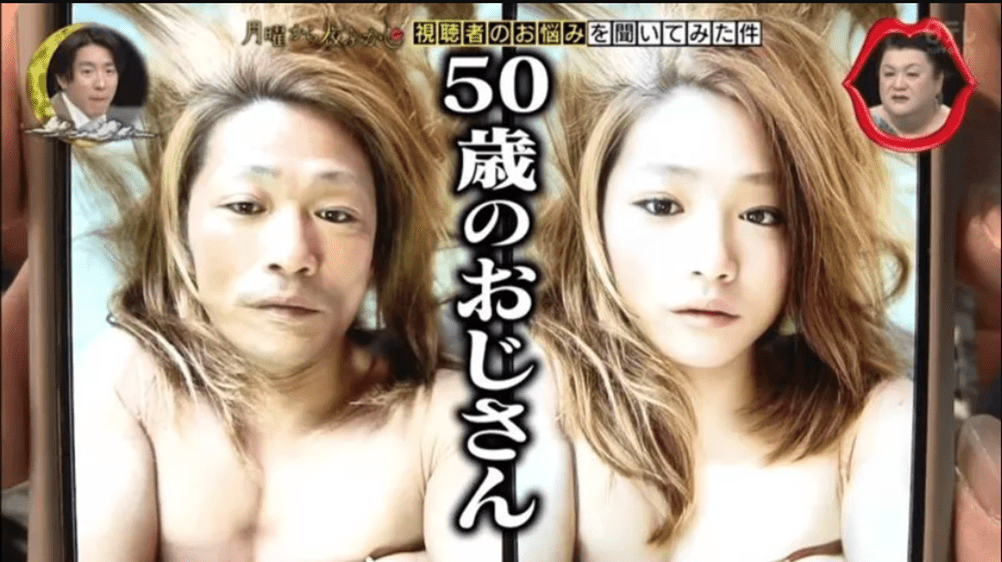

The first case

Let’s start with a harmless case. A popular Japanese blogger turned out to be a 50-year-old man who posed as a young girl using FaceApp filters. The truth was revealed by accident: in one photo, followers noticed a reflection in the bike mirror showing an adult man’s face instead of a young girl’s. Earlier, they had also been puzzled by a man’s hand in one of the photos.

The blogger later told journalists that he had used FaceApp filters to change his appearance. Besides the “beauty” settings, the filters can change a person’s gender and age. According to the man, he just wanted to feel like an Internet celebrity, but decided that everyone would rather see a beautiful girl than an old man.

The second case

In March 2019, the managing director of a British energy company was defrauded of €220,000 (approximately $240,000). He transferred the money to a counterparty in Hungary because his boss, the head of the parent company in Germany, had confirmed the instruction several times. However, it turned out that a creative intruder had used AI-based software to imitate the boss’s voice in real time and demand the payment.

The message appeared to come from the German boss’s address, and an email with contact details was also sent to the British director as confirmation. The only suspicious detail was the demand to make the payment as quickly as possible.

The third case

Fraudsters in China bought people’s high-resolution photos and personal data ($5-38 per set). They also bought “specially configured” smartphones ($250 per device) that imitated the front camera feed. In fact, a generated deepfake video was transmitted to the recipient (a government department).

This scheme enabled the fraudsters to register shell companies in the names of fake people and issue fake tax invoices to their clients. As a result, they managed to steal $76.2 million.

The fourth case

In India, “women” contacted men through fake accounts. The intruders held video calls, chatted with the victims and performed compromising acts. After such calls, the men received messages warning that the compromising videos would be sent to their relatives and acquaintances unless a ransom was paid.

Previously, real women took part in such schemes. However, the fraudsters then adopted deepfake and text-to-speech technologies, which let them create fake girls on video. Some victims transferred large sums to the intruders (up to millions of rupees); others, however, didn’t let themselves be tricked.

And that’s not all. Details of similar cases come to light regularly. For instance, studies are published from time to time showing that the identity verification checks used by banks are too vulnerable to deepfake attacks.

Video forgery

Let’s start with the theory. I relied on the paper Deepfakes Generation and Detection: State-of-the-art, open challenges, countermeasures and way forward. Its authors identify five techniques:

- Face-swapping. Videos created with this particular technique are what people usually call deepfakes.

- Lip syncing. No face transplantation is involved here: the victim’s lips move in sync with the fake audio track, just like in dubbing.

- Puppet master. Ideally, all of the donor’s facial movements and expressions are transferred to the victim’s face. This technique resembles the performance-capture technology used to create the characters in James Cameron’s Avatar.

- Face Synthesis and Attribute Editing. Unlike Puppet Master, only one face is processed here. A face may be aged or rejuvenated; you can change its colour, add a hairstyle, draw glasses, a hat, etc. Among relatively new tools I’d mention the Lensa photo editor; however, Snapchat with its filters is a more illustrative example here.

- Audio deepfakes. The authors put all audio technologies into this category. Personally, I’d further divide it into three subcategories: voice distorters, synthesizers and cloners.

All the tools can also be divided into two large groups:

- Tools that create the forgery in real time.

- Tools that produce the result after the fact.

It’s more illustrative to examine the peculiarities of each technique using specific examples, so let’s look at a few applications.

MSQRD

Although the idea behind MSQRD is shared by a number of other tools (for instance, Reface), I’d like to start with MSQRD itself because, if I’m not mistaken, it was the first publicly available program for creating deepfakes.

It was a start-up that was later bought by Facebook for $1,000,000.

In this video, MSQRD uses the Face-swapping / Face Synthesis and Attribute Editing techniques.

What makes such tools attractive for users:

- No advanced knowledge is required to use the tool; there is no need to study special manuals.

- Low hardware threshold: processing is performed on the service’s side.

- Minimal time expenditure: the result is obtained almost immediately.

- Free to use.

Limitations of such tools:

- Limited opportunities for creativity: the set of characters and scenes available for replacement is determined by the application developers.

DeepFaceLab

Probably the most well-known, most widely described and most fine-tuned tool. A prime example of the Face-swapping technique that simply can’t be ignored.

Manuals are available in many languages, both as text and as video guides. Neural network training and processing can even run on a CPU, which is why I decided to test the tool myself. You can see the result below:

Well, this is definitely not what I expected to see. Why? The answer is simple: I followed the principle stated at the beginning of the article, that is, to create a high-quality deepfake (video and audio) without studying the process in depth.

With DeepFaceLab, this approach definitely doesn’t work. Many nuances need to be taken into account. Before training the neural network, you need to consider at least the following:

- Quality of the “donor” and “victim” videos (lighting, colour, etc.)

- Size of the transplanted faces (to avoid the problem I ran into, when a small image was stretched and lost quality as a result)

- The head should appear in all poses in the video.

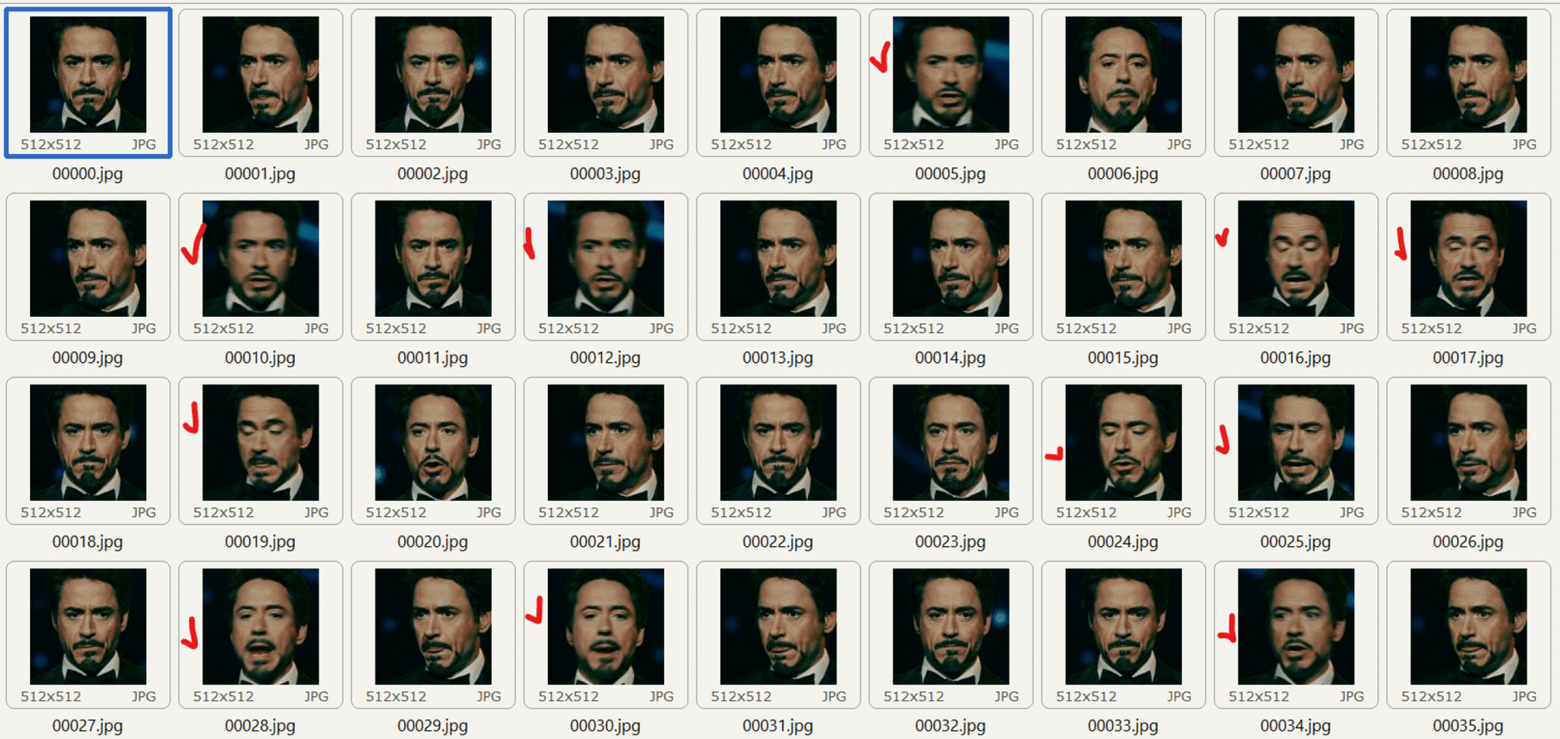

- The need to delete all “bad” photos from the data set before training the neural network (for instance, photos without a face, blurred photos, photos with irrelevant objects).

“Bad” photos that should be removed from the data set are marked in red

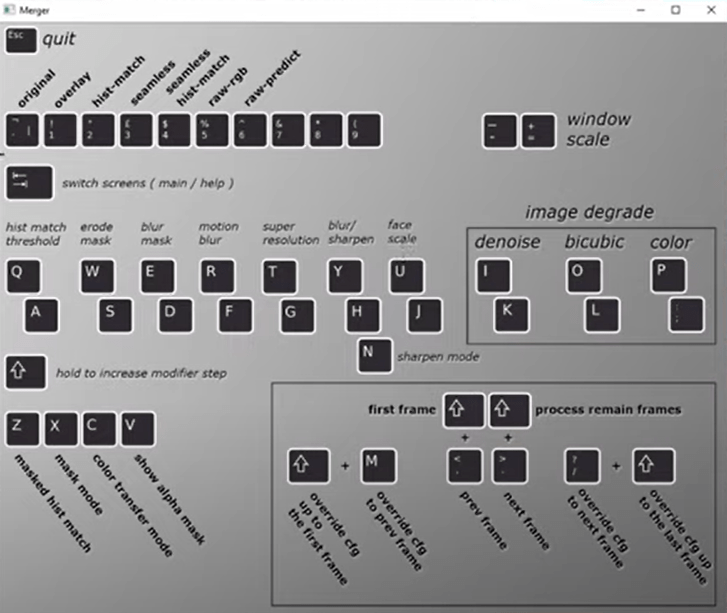
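Finding blurred photos by eye is tedious, so part of this clean-up can be automated. A common generic heuristic (not necessarily what DeepFaceLab does internally) is the variance of the Laplacian: sharp images have strong edges and therefore a high variance, blurred ones a low variance. The sketch below assumes grayscale images stored as lists of pixel rows; `filter_blurry` and its threshold of 100 are hypothetical and would need tuning on real data.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the image interior.

    `gray` is a grayscale image given as a list of rows of intensities.
    Low values suggest a blurred image with few sharp edges.
    """
    h, w = len(gray), len(gray[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x])
            responses.append(lap)
    return pvariance(responses)


def filter_blurry(images, threshold=100.0):
    """Split {name: image} into (kept, rejected) lists by sharpness."""
    kept, rejected = [], []
    for name, gray in images.items():
        target = kept if laplacian_variance(gray) >= threshold else rejected
        target.append(name)
    return kept, rejected


# Toy data: a checkerboard (maximally sharp) and a flat grey tile (no edges).
sharp = [[255 * ((x + y) % 2) for x in range(8)] for y in range(8)]
flat = [[128] * 8 for _ in range(8)]
kept, rejected = filter_blurry({"sharp.png": sharp, "flat.png": flat})
print("kept:", kept, "rejected:", rejected)
```

Real face crops are never this clear-cut, so in practice such a score is better used to sort the data set and review the worst images manually than to delete them automatically.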

After training is complete, there is still plenty of work to do. DeepFaceLab offers a built-in Merger tool, which lets you fit the “transplanted” face frame by frame.

This is where parameters such as blurring, mask size, mask position, colour, etc. are configured.

If you choose the quickest option, the result will look much like mine.
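To see why the blurring setting matters, here is a toy model of the final compositing step on a single row of pixels. It assumes the swapped face is pasted through a mask whose edges are feathered with a simple box blur; this is an illustration of the principle, not DeepFaceLab’s actual Merger code, and all names are hypothetical. A hard mask leaves a visible seam, while a wider blur hides the seam but smears the face into the background.

```python
def box_blur(values, radius):
    """Average each value with its neighbours within `radius` (edges clamped)."""
    n = len(values)
    out = []
    for i in range(n):
        window = [values[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def blend_row(face, background, mask, blur_radius):
    """Composite one scanline: mask weight 1 shows the face, 0 the background."""
    soft = box_blur(mask, blur_radius) if blur_radius > 0 else list(mask)
    return [m * f + (1 - m) * b for f, b, m in zip(face, background, soft)]


def seam_step(row):
    """Largest jump between neighbouring pixels: a crude 'visible seam' score."""
    return max(abs(b - a) for a, b in zip(row, row[1:]))


background = [100.0] * 10
face = [200.0] * 10
mask = [0, 0, 0, 1, 1, 1, 1, 0, 0, 0]

hard = blend_row(face, background, mask, blur_radius=0)
soft = blend_row(face, background, mask, blur_radius=1)
print("hard seam:", seam_step(hard), "feathered seam:", round(seam_step(soft), 2))
```

Increasing `blur_radius` further lowers the seam score but spreads the transition over more pixels, which is exactly how the “overdone” blurring on mask borders becomes visible on close-ups.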

What makes such tools attractive for users:

- Lots of opportunities for creativity: you can create arbitrary content.

- Low hardware requirements. Note, however, that the less powerful the hardware, the longer neural network training and other processing take.

- Minimal digital footprint.

- Free of charge.

Limitations of such tools:

- A lot of knowledge is required to work with the tool.

- It takes a lot of time.

- It is impossible to make a fake in real time.

Wav2Lip

Now it’s time to discuss a tool that uses the Lip syncing technique. Although I had no suitable hardware at hand, the tool let me have some fun in Google Colab. Following the manual, I achieved the following result.

The tool seems to be quite sensitive to noise and may mistake it for speech. As a result, in some silent fragments of the video you can see the lips moving. Probably, preprocessing the audio by inserting complete silence between words would help avoid such false positives. However, I’m not going to check it.
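The preprocessing idea above can be sketched as a simple noise gate: split the audio into short frames, measure each frame’s RMS energy and replace quiet frames with digital silence. The frame length (10 ms at an assumed 16 kHz sample rate) and the threshold are hypothetical values, and, as said above, I haven’t verified that this actually improves Wav2Lip’s output.

```python
import math


def noise_gate(samples, frame_len=160, threshold=0.02):
    """Replace frames whose RMS energy is below `threshold` with silence.

    `samples` are floats in [-1.0, 1.0]; 160 samples = 10 ms at 16 kHz.
    """
    out = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        out.extend(frame if rms >= threshold else [0.0] * len(frame))
    return out


# Toy signal: 10 ms of a loud 440 Hz tone ("speech") followed by 10 ms of hiss.
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(160)]
hiss = [0.01 if n % 2 else -0.01 for n in range(160)]
gated = noise_gate(tone + hiss)
print("non-zero samples after gating:", sum(1 for s in gated if s != 0.0))
```

A hard gate like this can clip quiet word endings, so a real pipeline would probably need smoother fades; the sketch only shows where the “silence between words” would come from.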

What makes such tools attractive for users:

- Opportunities for creativity: you can create arbitrary content.

- Less trouble with data preparation compared with DeepFaceLab.

- More authenticity, because instead of a “full transplantation” only the small area around the mouth is altered.

- Minimal digital footprint (if you don’t use Colab).

- Free of charge.

Limitations of such tools:

- High knowledge threshold.

- High hardware threshold (if you don’t use Colab).

- It takes a lot of time.

- It is impossible to make the fake in real time.

It is also advisable to avoid non-uniform backgrounds; otherwise, the substitution area will be conspicuous.

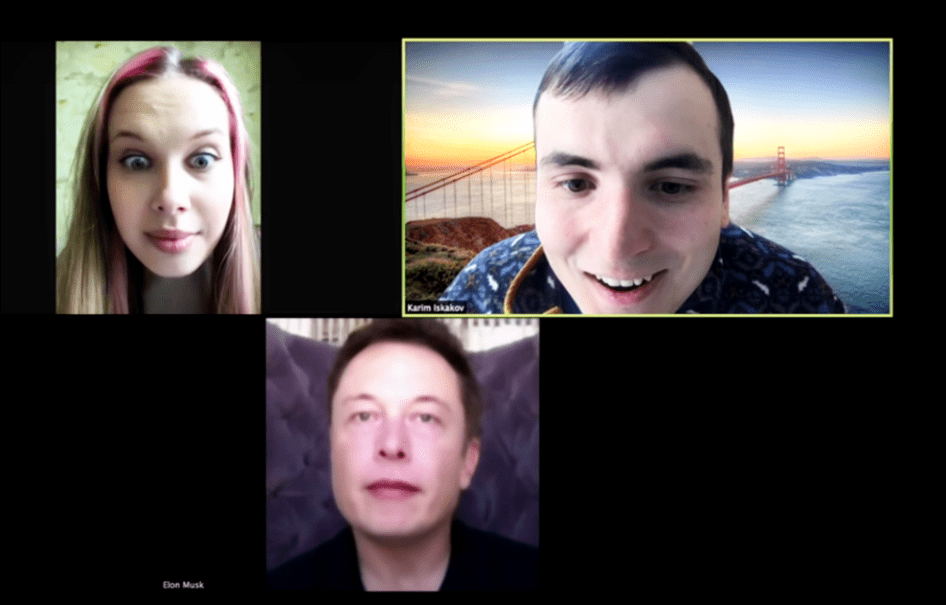

Avatarify

The tool uses the Puppet master technique and can bring a photo “to life” in real time. There is a famous video demonstrating the capabilities of this solution: Elon Musk joins a Zoom meeting. Note that “Elon Musk” barely moves his head; in fact, only “his” eyes move. He looks like a wax figure. However, there is a nuance: many people have little facial expression in real life, so one may be deceived quite easily. The voice, however, was not faked at all; we will discuss real-time voice synthesis later.

If we examine successful projects, we find that the pose and other parameters must be chosen carefully to keep the avatar from being deformed. Have a look.

However, commercial projects deliver far more impressive results.

What makes such tools attractive for users:

- The fake can be created in real time.

- Freedom for creativity: the number of scenes and characters for fakes isn’t limited.

- Minimal digital footprint (for non-commercial tools).

- Free (for non-commercial tools).

- Low knowledge entrance threshold (for commercial tools).

Limitations of such tools:

- High knowledge entrance threshold (for non-commercial tools).

- High hardware entrance threshold (for non-commercial tools).

- Paid (for commercial tools).

- Digital footprint (for commercial tools).

As for the Face Synthesis and Attribute Editing technique, I personally consider it an analogue of Puppet Master, with similar advantages, limitations and conclusions.

Audio forgery

Now it’s time to find out how a voice can be forged. According to one version of the first-ever case of deepfake use, the victim’s voice wasn’t cloned at all. The intruders found an impersonator whose voice was similar to the victim’s. This person then imitated the victim’s accent, manner of speech and other features. To make everything even more realistic, the voice was additionally distorted. This is exactly where we will start.

“Distorters”

They are often used in gaming, for instance, when players don’t want to be recognized by their voice. Distorters work in real time and change the voice on the fly.

What makes such tools attractive for users:

- There are free tools available.

- They work in real time.

- Low hardware requirements.

- Low knowledge entrance threshold.

Limitations of such tools:

- You will hardly manage to imitate a specific person’s voice.

- Theoretically, the distorted voice can be converted back to recover the speaker’s original voice.
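Why can a distortion be undone? If a distorter applies a known, invertible transformation, applying the inverse recovers something close to the original. Below is a deliberately simplistic “distorter”, assumed for illustration only: it raises pitch by resampling the signal with linear interpolation (which, unlike real voice changers, also speeds the speech up). Resampling with the reciprocal factor restores the original almost exactly. Real distorters are more complex and may add effects that are much harder to invert.

```python
import math


def resample(signal, factor):
    """Read `signal` at positions 0, factor, 2*factor, ... (linear interpolation).

    When played back at the same sample rate, factor > 1 raises the pitch
    (and shortens the clip); factor < 1 lowers it.
    """
    n_out = int(len(signal) / factor)
    last = len(signal) - 1
    out = []
    for i in range(n_out):
        pos = i * factor
        i0 = min(int(pos), last)
        frac = pos - int(pos)
        i1 = min(i0 + 1, last)
        out.append(signal[i0] * (1 - frac) + signal[i1] * frac)
    return out


rate = 8000
voice = [math.sin(2 * math.pi * 200 * n / rate) for n in range(rate)]  # 200 Hz tone
distorted = resample(voice, 1.25)         # plays back as a 250 Hz tone
restored = resample(distorted, 1 / 1.25)  # inverse factor undoes the shift

# Compare away from the clip edges, where interpolation is well defined.
worst = max(abs(restored[j] - voice[j]) for j in range(100, len(restored) - 100))
print(f"largest deviation after restoring: {worst:.4f}")
```

The residual error comes only from linear interpolation; an attacker who doesn’t know the exact factor could still search for it by trying candidate values.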

Voice cloning + speech synthesizer

The logical next step after speech synthesis is synthesizing the speech of a particular person. I tried this free tool.

Simplified, the workflow looks like this:

- Obtain recordings of the “victim’s” voice. The higher the audio quality, the better the forgery.

- Prepare the data set: cut the audio into separate phrases, transcribe them and save everything in the required format.

- Whoosh (neural network training starts).

- Finally, type the text, and the synthesizer will voice it with the faked voice.
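The data set preparation step is the most mechanical one, so here is a rough sketch of it. It assumes the recording is split at pauses (runs of low-amplitude samples) and that the tool expects pipe-separated `clip_id|transcript` lines, loosely modelled on the public LJSpeech data set; the exact format depends on the tool you use. The transcripts would come from manual or automatic transcription; the ones below are placeholders, and all function names and thresholds are hypothetical.

```python
def split_on_pauses(samples, silence_level=0.01, min_pause=400):
    """Return (start, end) sample ranges of phrases separated by pauses.

    A pause is at least `min_pause` consecutive samples whose absolute
    amplitude is below `silence_level`; `end` is exclusive.
    """
    phrases, start, quiet = [], None, 0
    for i, s in enumerate(samples):
        if abs(s) >= silence_level:
            if start is None:
                start = i
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet >= min_pause:
                phrases.append((start, i - quiet + 1))
                start, quiet = None, 0
    if start is not None:
        phrases.append((start, len(samples) - quiet))
    return phrases


def build_dataset(samples, phrases, transcripts, prefix="clip"):
    """Pair each phrase with its transcript; return (clips, metadata text)."""
    if len(phrases) != len(transcripts):
        raise ValueError("every phrase needs exactly one transcript")
    clips, lines = {}, []
    for n, ((start, end), text) in enumerate(zip(phrases, transcripts), 1):
        clip_id = f"{prefix}_{n:04d}"
        clips[clip_id] = samples[start:end]
        lines.append(f"{clip_id}|{text}")
    return clips, "\n".join(lines)


# Toy recording: two "phrases" separated by a 500-sample pause.
recording = [0.5] * 1000 + [0.0] * 500 + [0.4] * 800 + [0.0] * 100
phrases = split_on_pauses(recording)
clips, metadata = build_dataset(recording, phrases, ["first phrase", "second phrase"])
print(phrases)
print(metadata)
```

Each clip would then be saved as a separate audio file named after its `clip_id`; that step is omitted here.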

There are plenty of instructions describing what to do at each stage. I can’t share the result, as I used Colab and didn’t manage to achieve anything significant. The main reason was that if I let the neural network train for a while, it eventually failed with an error, and if training was short, the faked voice didn’t resemble the original.

What makes such tools attractive for users:

- Free of charge.

- Plenty of options for creativity: any voice can be forged.

Limitations of such tools:

- High knowledge entrance threshold.

- High technical requirements.

- A lot of time is required.

- Tool limitations (stress, intonation, etc.)

- They don’t operate in real time.

It’s important to note that other techniques are also used to make deepfakes more credible, and users must be aware of them to counter deepfake-related threats. One of them is deliberately degrading the deepfake to achieve a better overall result: to conceal the flaws of a deepfake generated on the fly during a call, its creator may add various kinds of interference to the audio and video. These include, but aren’t limited to:

- Extraneous noise

- Pixelation

- Signal interruptions, and so on.

Be attentive, as such attributes may indicate a deepfake.
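Pixelation shows why deliberate degradation works: averaging the image over blocks throws away exactly the fine detail (mask borders, skin texture, trembling edges) you would otherwise inspect. Below is a minimal sketch of block pixelation on a grayscale image stored as a list of rows; the function name and block size are arbitrary choices for illustration.

```python
def pixelate(gray, block=4):
    """Replace each block x block tile with its mean intensity."""
    h, w = len(gray), len(gray[0])
    out = [row[:] for row in gray]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            tile = [(y, x)
                    for y in range(by, min(by + block, h))
                    for x in range(bx, min(bx + block, w))]
            mean = sum(gray[y][x] for y, x in tile) / len(tile)
            for y, x in tile:
                out[y][x] = mean
    return out


# A sharp vertical edge between columns 2 and 3 (think: the border of a mask).
edge = [[0 if x < 3 else 255 for x in range(8)] for _ in range(8)]
blocky = pixelate(edge)
print(blocky[0])
```

After pixelation the edge no longer sits where it was: it snaps to the block boundary between columns 3 and 4, and its true position can no longer be recovered from the image.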

How to spot deepfakes without special tools and services? Practice

So, armed with the theory, we can now develop a clear set of instructions. First, a few clarifications. Many people can name a set of deepfake attributes, and such lists shouldn’t be dismissed as misinformation.

For instance, below is my personal list of attributes to check when working with audio:

- Expressiveness

- Shortness of breath

- Emotions

- Accents

- Filler words

- Specific defects

- Tempo

What’s more, in practice every tool used to create fakes has its own limitations.

However, there is little chance that anyone will be able to recall all this information at the right moment.

That’s why I propose the following algorithm for spotting deepfakes:

- Consider the context.

- Identify the technique used.

- Recall its limitations.

- Look for the attributes that correspond to the technique identified in the second step.
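To make steps two to four easier to apply, the technique, limitation and attribute mapping can be written down as a lookup table. The sketch below encodes only what this article describes for each video technique; the dictionary layout and `checklist_for` are my own hypothetical illustration, and the lists are far from exhaustive. Step one (context) remains a human judgement.

```python
# Hypothetical lookup table summarising this article's observations.
# Keys are the four video techniques; the lists are illustrative only.
CHECKLIST = {
    "face-swapping": {
        "limitations": [
            "large head-turn angles",
            "accessories: glasses, tattoos, piercings",
            "changing distance to the camera",
            "per-frame imposition that ignores motion",
        ],
        "attributes": [
            "flattened 'pancake' face at extreme angles",
            "blurred mask borders",
            "skin texture or shine differs from the rest of the head",
            "face trembles or vanishes",
        ],
    },
    "lip syncing": {
        "limitations": ["noise mistaken for speech", "non-uniform backgrounds"],
        "attributes": ["lips move during silence",
                       "conspicuous area around the mouth"],
    },
    "puppet master": {
        "limitations": ["pose must be chosen carefully", "little head movement"],
        "attributes": ["wax-figure look, only the eyes move",
                       "deformed avatar",
                       "unnatural movement of facial parts (e.g. the nose)"],
    },
    "face synthesis and attribute editing": {
        # The article treats this as an analogue of Puppet Master.
        "limitations": ["pose must be chosen carefully", "little head movement"],
        "attributes": ["wax-figure look", "deformed features"],
    },
}


def checklist_for(technique):
    """Steps 2-4: map the identified technique to its limitations and attributes."""
    entry = CHECKLIST[technique.strip().lower()]
    return entry["limitations"], entry["attributes"]


limitations, attributes = checklist_for("Face-swapping")
for item in attributes:
    print("look for:", item)
```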

I’ll show how this works using the examples mentioned at the very beginning (the “Top 10 Deepfake Videos” compilation). The only additional tool you need is any player capable of frame-by-frame playback.

Example 1

In this case, as with many other forgeries, checking the context (the first step) is enough.

However, let’s follow the algorithm:

- The Face Swapping technique is used.

- Its limitations concern the position of the head when it turns at large angles.

- The neural network simply doesn’t know how to draw the face correctly and tries to show both eyes for as long as possible. As a result, the face looks like a pancake. How did this happen? Probably, the data set of Steve Buscemi’s photos that the neural network was trained on didn’t include all the required angles.

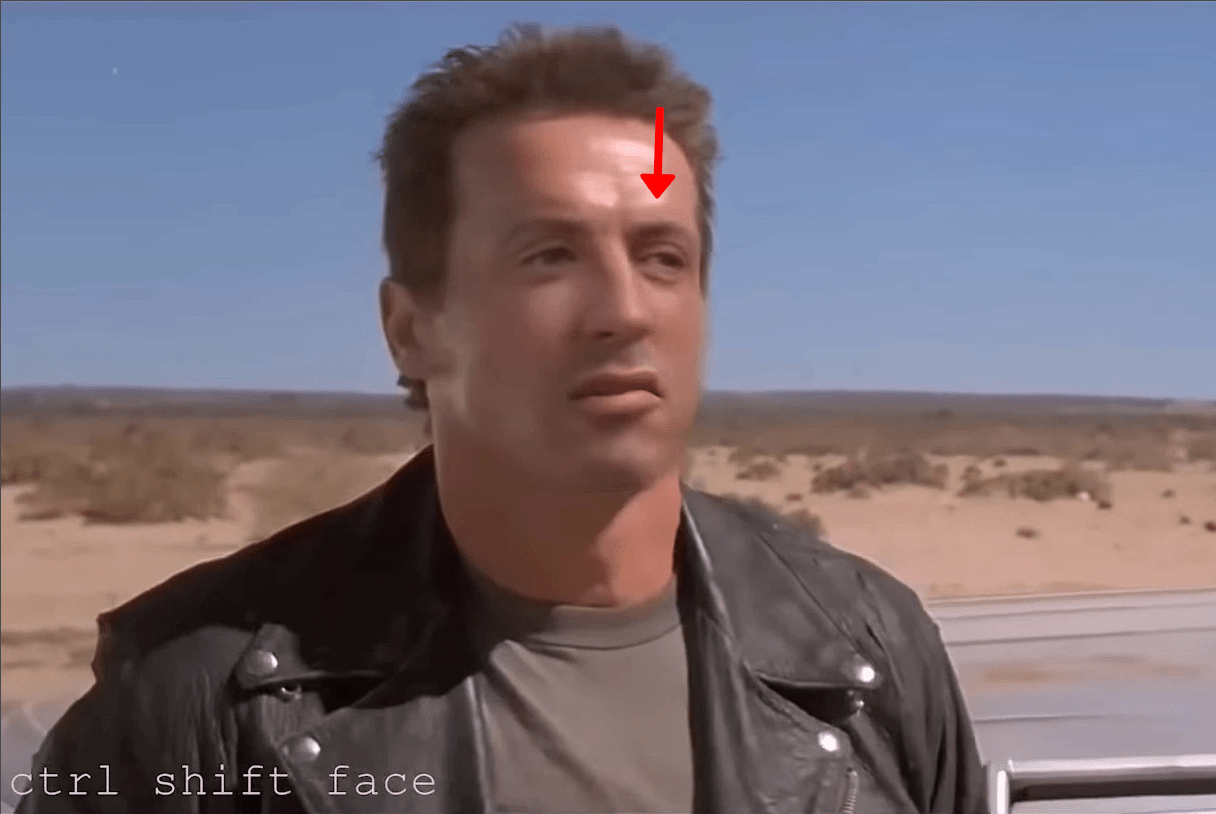
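The missing-angles problem can be checked before training. Assuming you already have an estimated yaw angle (left/right head rotation, in degrees) for every face in the data set, for example from a head-pose estimator, a simple histogram shows which angles are not covered. The angles below are made up for illustration, and the 15-degree bin width is an arbitrary, hypothetical choice.

```python
def missing_yaw_ranges(yaws, bin_width=15, max_yaw=90):
    """Return (low, high) yaw bins, in degrees, that contain no faces at all."""
    counts = {}
    for yaw in yaws:
        if -max_yaw <= yaw < max_yaw:
            low = int((yaw + max_yaw) // bin_width) * bin_width - max_yaw
            counts[low] = counts.get(low, 0) + 1
    return [(low, low + bin_width)
            for low in range(-max_yaw, max_yaw, bin_width)
            if counts.get(low, 0) == 0]


# A typical interview-style data set: mostly frontal faces, few profiles.
yaws = [-10, -5, 0, 3, 8, 12, 20, 25, -20, -25, 31, -33]
print(missing_yaw_ranges(yaws))
```

Any empty bin is a head position the network has never seen, which is where the “pancake” face is most likely to appear.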

Example 2

If you slow down playback or step through the video frame by frame, several attributes can be spotted at once. For instance, the skin texture doesn’t match.

What’s more, they overdid the blurring a bit. It caught my eye even at normal speed.

On close-ups, the blurring is even more visible.

At one point, the network overdid it and tried to draw a face where there simply couldn’t be one.

On close-ups, you can also spot the imposed face trembling.

Now let’s follow the algorithm:

- The Face-swapping technique is used.

- If the donor’s and victim’s face parameters don’t match exactly, the donor’s face will be blurred. Blurring the borders helps blend the fake face in more precisely, but it’s easy to overdo. The face is imposed on each frame without regard to past and future movements, so it may “tremble”.

- I managed to spot plenty of typical attributes:

- The forehead glistens, while other parts of the body don’t.

- The blurring on the “mask” borders is clearly visible.

- On close-ups, you can see that the skin texture differs.

- There are moments when the “victim’s” head (forehead, neck, ears) doesn’t move, while the “donor’s” face moves slightly.

- The trembling effect is present as well.

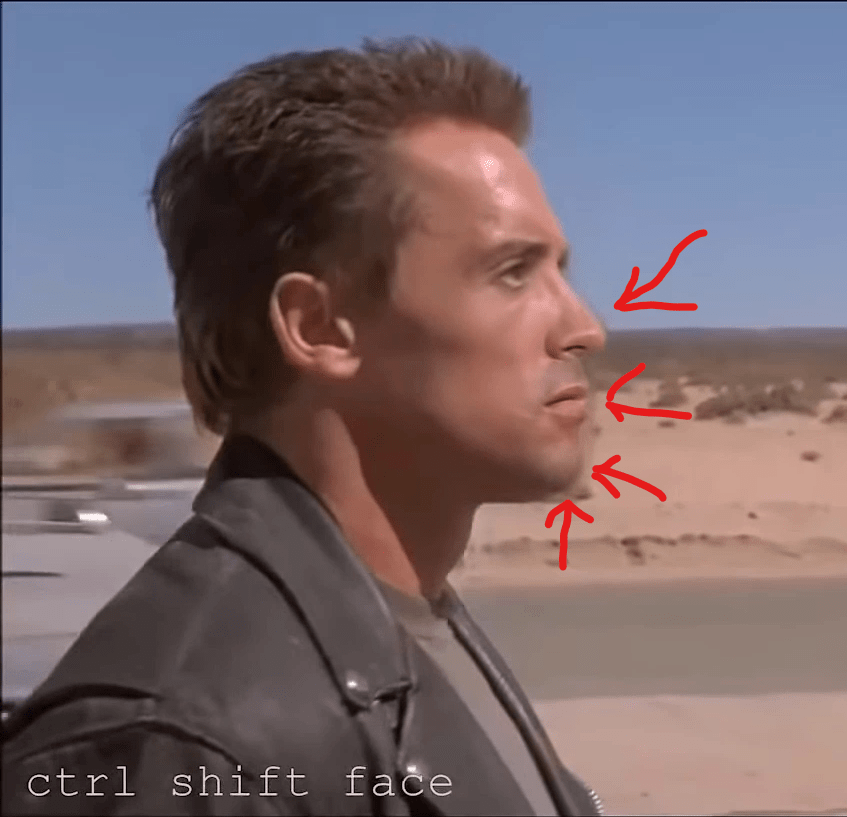

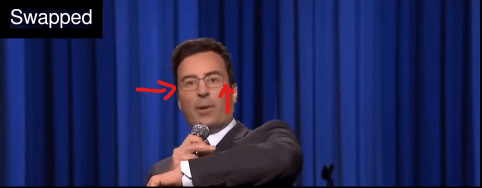
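The trembling follows from the per-frame imposition described above: each frame’s face position is estimated independently, so small estimation errors make the face jump around. Below is a toy model, assuming we track the x-coordinate of the pasted face’s centre in every frame. Independent per-frame estimates jitter, while smoothing over neighbouring frames (a moving average, one simple option among many) reduces frame-to-frame jumps. The mean absolute frame-to-frame change used here is a hypothetical metric for what frame-by-frame viewing shows by eye.

```python
import random


def jitter(track):
    """Mean absolute frame-to-frame change of a coordinate track."""
    return sum(abs(b - a) for a, b in zip(track, track[1:])) / (len(track) - 1)


def moving_average(track, radius=2):
    """Smooth a track by averaging each value with up to `radius` neighbours."""
    n = len(track)
    out = []
    for i in range(n):
        window = track[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


random.seed(0)
true_x = [100 + 0.5 * t for t in range(60)]                 # head drifts slowly
per_frame = [x + random.uniform(-2, 2) for x in true_x]     # independent estimates
smoothed = moving_average(per_frame)

print(f"true motion: {jitter(true_x):.2f} px/frame")
print(f"per-frame imposition: {jitter(per_frame):.2f} px/frame")
print(f"temporally smoothed: {jitter(smoothed):.2f} px/frame")
```

When the per-frame jitter is clearly larger than the real motion of the head, the fake face appears to tremble on top of an otherwise steady head.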

Example 3

- The face swapping technique is used.

- Such tools often fail to render accessories (glasses, tattoos, piercings, etc.) adequately. Problems often appear when the head turns. Issues also occur when the distance between the subject and the camera changes.

- I’ll show this using specific frames. First, the temples of the glasses are missing.

Second, when the head turns quickly, not only the glasses but also the imposed face vanishes. This fragment lasts only a second, so I slowed it down four times and held the frame for two seconds.

Third, when the distance between the camera and the subject changes, you can see the fake face tremble and finally vanish completely. I paused on the last frames so the transition can be seen clearly.

Example 4

This is how Nicolas Cage might look (if he wished to).

In fact, this is quite a high-quality forgery: the fake face stays in place almost all the time, even when the hair moves and partly covers the face. At normal speed, everything seems fine. However, let’s recall the face-swapping technique and its limitations. With them in mind, let’s analyze once more how the head turns. Frame by frame, you can see that at maximum angles the face looks like a pancake again. But most importantly, there is a moment when the neural network clearly failed.

Example 5

Sometimes the result is impressive, and flaws can be found only on a few frames. For instance, in this example I managed to find only one issue (a problem with the nose at the end of the video). Did you notice anything else?

Example 6

This is another example of a high-quality forgery where it is difficult to spot any sign of a fake. However, I recommend paying attention to how the cheek swells.

If you open the video in a video editor, loop the fragment and add a reversed copy, the effect becomes even more visible.
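Looping a fragment forwards and then backwards (“ping-pong”) helps because the same motion is shown twice in opposite directions, so movement that doesn’t match the rest of the head, like the swelling cheek, stands out. A video editor does this for you; the frame ordering itself is simple and is sketched here with frame indices standing in for frames. `ping_pong` is a hypothetical helper name.

```python
def ping_pong(frames, repeats=2):
    """Forward pass, then reverse pass, without duplicating the turning frames."""
    frames = list(frames)
    cycle = frames + frames[-2:0:-1]
    return cycle * repeats


print(ping_pong([0, 1, 2, 3]))
```

Skipping the first and last frames in the reverse pass avoids a visible “stall” at each turning point, which could otherwise be mistaken for an artefact.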

Example 7

Let’s now examine a case where a different technique was used.

Puppet Master or Lip Syncing. Pay attention to the movements of Kim Kardashian’s nose.

If reversed in a video editor, the effect is even stronger.

Conclusions

Based on my personal observations and research, I dare say that we are unlikely to face a deepfake “epidemic” in the near future. However, this holds only if a few conditions are met:

- The technical, time, knowledge and financial entry thresholds remain high.

- The cost of creating a deepfake exceeds the profit from using it.

- Deepfake detection tools are not only created but also actually used.

Currently, the basic recommendation for spotting deepfakes is: always be careful.

Following this recommendation, use this algorithm:

- Consider the context.

- Identify the technique used (there are only four of them for video).

- Recall its limitations.

- Look for the attributes that correspond to the technique identified in the second step.

As technology continues to evolve, information security is becoming increasingly important. Take care of your organisation's information security now. Try SearchInform products for free.
